Machine Learning: Supervised Learning 1

Supervised Learning is a technique in which we train the computer using labeled data, meaning that the training data is already tagged with the correct answers.

We will be implementing these algorithms in Python.

Here are the steps you need to follow to set up your computer for machine learning. If you don't want to go into deep learning right now, you can skip steps 4, 5, and 6.

The first algorithm we are going to see is:

Linear Regression

Regression deals with predicting continuous values. It helps to estimate target values such as prices, temperature, population, etc. Linear regression is the simplest form of regression.

Once training is complete, every prediction this model makes will fall on the regression line learned during training.

Let's take the example of predicting income. Our input data (X) will contain features (columns of information). These features can be numerical (e.g., years of experience) or categorical (e.g., role).
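A model can only work with numbers, so a categorical feature such as role is commonly converted into numeric columns before training. The sketch below assumes one-hot encoding (one 0/1 column per distinct role), which is one common choice rather than the only one; the experience values and role names are made up for illustration.

```python
import numpy as np

# Hypothetical training data: years of experience (numerical) and role (categorical)
years = np.array([1.0, 3.0, 5.0, 7.0])
roles = ["Analyst", "Engineer", "Analyst", "Manager"]

# One-hot encode the categorical column: one 0/1 column per distinct role
categories = sorted(set(roles))
role_onehot = np.array([[1.0 if r == c else 0.0 for c in categories] for r in roles])

# Final input matrix X: one row per observation, one column per feature
X = np.column_stack([years, role_onehot])

print(categories)  # ['Analyst', 'Engineer', 'Manager']
print(X)
```

If this matrix is used together with an intercept β0, one of the role columns is usually dropped, because the full set of one-hot columns always sums to 1 and would duplicate the intercept.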

We'll need many training observations for our model to give good predictions.

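The basic mathematical function for linear regression with a single feature X is:

$$Y = \beta_0 + \beta_1 X$$

where β0 is the intercept and β1 is the slope (the coefficient of X).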

When we perform Linear Regression using multiple features, it is known as Multiple Linear Regression.
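With n features the model becomes Y = β0 + β1X1 + β2X2 + ... + βnXn, so a prediction is the intercept plus a weighted sum of the features. A minimal NumPy sketch, assuming the coefficients are already known; `predict` is a hypothetical helper and the coefficient values are invented for illustration.

```python
import numpy as np

def predict(X, beta0, beta):
    """Multiple linear regression prediction: Y = beta0 + beta1*X1 + ... + betan*Xn."""
    return beta0 + X @ beta

# Hypothetical coefficients for two features (years of experience, a 0/1 role flag)
beta0 = 20000.0
beta = np.array([3000.0, 5000.0])

# Two observations, one row each
X = np.array([[2.0, 0.0],
              [5.0, 1.0]])

print(predict(X, beta0, beta))  # [26000. 40000.]
```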

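When there are more than two features, the features and the target together span more than 3 dimensions. We cannot visualize more than 3 dimensions directly, so we cannot plot such a model as a single graph.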

To get the best-fitting line, we adjust the values of the coefficients β.

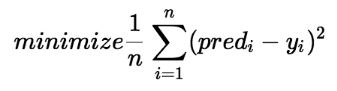

To find the best-fitting line, the model tries to minimize the difference between the true values of Y and the predicted values. This difference is measured by a cost function.

Here, the cost function is the Root Mean Squared Error (RMSE) between the predicted Y and the true Y.
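For m observations, RMSE = sqrt((1/m) Σ (yᵢ − ŷᵢ)²), where yᵢ is a true value and ŷᵢ the corresponding prediction. A minimal NumPy sketch of that formula; `rmse` is a hypothetical helper name and the sample values are made up.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root Mean Squared Error: square root of the mean squared difference."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return np.sqrt(np.mean((y_true - y_pred) ** 2))

# Every prediction is off by exactly 1, so the RMSE is 1
print(rmse([3.0, 5.0, 7.0, 9.0], [2.0, 6.0, 6.0, 10.0]))  # 1.0
```

Because the square root is an increasing function, the β values that minimize RMSE are the same ones that minimize the plain mean squared error (MSE), which is why many implementations optimize MSE directly.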

In gradient descent optimization, we find the best-fitting line by starting with random values of β and then iteratively updating them, each step moving in the direction that lowers the cost, until the cost reaches its minimum.
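The loop described above can be sketched in NumPy as follows. This is a minimal illustration, assuming the cost being minimized is the mean squared error (which has the same minimum as RMSE and a simpler gradient); the function name, learning rate `lr`, and iteration count are hypothetical choices, and the data is synthetic.

```python
import numpy as np

def gradient_descent(X, y, lr=0.05, n_iters=5000, seed=0):
    """Fit Y = beta0 + X @ beta by gradient descent on the mean squared error."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    beta0 = rng.normal()          # random starting intercept
    beta = rng.normal(size=n)     # random starting coefficients
    for _ in range(n_iters):
        error = beta0 + X @ beta - y          # predicted Y minus true Y
        grad_beta0 = 2.0 * error.mean()       # d(MSE)/d(beta0)
        grad_beta = 2.0 * X.T @ error / m     # d(MSE)/d(beta)
        beta0 -= lr * grad_beta0              # step against the gradient
        beta -= lr * grad_beta
    return beta0, beta

# Synthetic, noise-free data generated from y = 1 + 2x
X = np.linspace(0.0, 1.0, 20).reshape(-1, 1)
y = 1.0 + 2.0 * X[:, 0]

beta0, beta = gradient_descent(X, y)
print(float(beta0), beta)
```

Starting from random values, the updates should bring β0 close to 1 and β1 close to 2. If the learning rate is too large the updates can overshoot and diverge, so it usually needs some tuning.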

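In many real datasets the relationship between the features and the target is not a straight line, so plain Linear Regression fits poorly. In such cases we use Polynomial Regression, which adds powers of the features (such as X² and X³) as extra inputs and can give better predictions.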
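Polynomial regression is still linear regression, just fitted on expanded features 1, x, x², and so on. A minimal NumPy sketch, assuming a single input feature and made-up noise-free data; `fit_polynomial` is a hypothetical helper, and `np.linalg.lstsq` solves the least-squares problem directly rather than by gradient descent.

```python
import numpy as np

def fit_polynomial(x, y, degree):
    """Least-squares fit of y = b0 + b1*x + ... + b_degree*x**degree.

    Returns the coefficients (b0 first) and the RMSE on the training data.
    """
    A = np.vander(x, degree + 1, increasing=True)   # columns: 1, x, x**2, ...
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)    # ordinary least squares
    residual = y - A @ coef
    return coef, float(np.sqrt(np.mean(residual ** 2)))

# Hypothetical noise-free data from the curve y = 1 + 0.5x + 2x^2
x = np.linspace(-3.0, 3.0, 30)
y = 1.0 + 0.5 * x + 2.0 * x ** 2

line_coef, line_rmse = fit_polynomial(x, y, degree=1)   # plain linear regression
quad_coef, quad_rmse = fit_polynomial(x, y, degree=2)   # polynomial regression

print("degree 1:", np.round(line_coef, 3), "RMSE", round(line_rmse, 3))
print("degree 2:", np.round(quad_coef, 3), "RMSE", round(quad_rmse, 3))
```

On this curved data the straight line leaves a large RMSE, while the degree-2 fit recovers the coefficients 1, 0.5 and 2 almost exactly. In scikit-learn the same idea is usually expressed by combining `PolynomialFeatures` with `LinearRegression`.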

We use the scikit-learn library in Python to build Linear Regression models.
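A minimal scikit-learn sketch, assuming made-up income data with two numeric features. `LinearRegression` learns the intercept (`intercept_`, our β0) and the coefficients (`coef_`, our β1 to βn); note that it computes the ordinary least-squares solution directly instead of running gradient descent.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error

# Hypothetical data: [years of experience, 0/1 role flag] -> income
X = np.array([[1, 0], [2, 0], [3, 1], [4, 1], [5, 0], [6, 1]], dtype=float)
y = 20000 + 3000 * X[:, 0] + 5000 * X[:, 1]   # noise-free, so the fit is exact

model = LinearRegression()
model.fit(X, y)                 # learns intercept_ (beta0) and coef_ (beta1, beta2)
print("beta0:", model.intercept_, "beta:", model.coef_)

# Evaluate with RMSE on the training data
y_pred = model.predict(X)
rmse = np.sqrt(mean_squared_error(y, y_pred))
print("RMSE:", rmse)

# Predict income for a new observation: 7 years of experience, role flag = 1
print(model.predict(np.array([[7.0, 1.0]])))
```

Because the hypothetical data has no noise, the fitted intercept and coefficients should match the 20000, 3000 and 5000 used to generate it; on real data you would evaluate the model on observations it was not trained on.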

THANK YOU FOR READING :).
